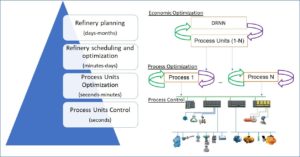

The purpose of the Modcon-AI package is to provide process engineers with a set of modern optimization tools that enable connectivity, validation, and prediction of the main KPIs, so that they can take the correct decisions to maintain and improve the management of industrial processes. The dynamic artificial neural network (NN) models implemented in this solution calculate and predict physical properties and chemical compositions for different process streams, and propose the set points required to achieve the predicted values.

Deep Reinforcement Neural Network (DRNN) is a powerful machine learning technique that can be used effectively to optimize industrial processes for different strategic goals, allowing operators to shift focus intelligently and confidently. The DRNN algorithm is a long-standing machine learning framework closely associated with optimal control. Its mechanism can be summarized as follows: an agent seeks an optimal policy by interacting with its environment through feedback between observed states and quantified rewards, a formulation that can be traced back to the Markov Decision Process (Sutton, 1998).
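
For reference, the Markov Decision Process mentioned above is usually written in the following textbook form. The notation is generic and is an assumption here, not taken from the Modcon-AI documentation: $\mathcal{S}$ is the set of observed process states, $\mathcal{A}$ the set of available actions (for example set-point changes), $P$ the state transition probabilities, $R$ the reward function, and $\gamma \in [0, 1)$ a discount factor. The agent looks for the policy $\pi^{*}$ that maximizes the expected discounted sum of rewards:

$$
\mathcal{M} = (\mathcal{S}, \mathcal{A}, P, R, \gamma), \qquad
\pi^{*} = \arg\max_{\pi} \; \mathbb{E}_{\pi}\!\left[ \sum_{t=0}^{\infty} \gamma^{t} \, R(s_t, a_t) \right]
$$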

Reinforcement learning uses algorithms that do not rely only on historical data sets to learn to make a prediction or perform a task. Just as humans learn by trial and error, so do these algorithms. A reinforcement learning agent is given a set of actions that it can apply to its environment to obtain rewards or reach a certain goal. These actions change the state of the agent and the environment. The DRNN agent receives rewards based on how far its actions bring it toward its goal.
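
The trial-and-error loop described above can be illustrated with a minimal sketch. It uses plain tabular Q-learning rather than a deep network, and everything in it is a hypothetical toy: the five discretized states, the `TARGET` operating point, the three set-point actions, and the reward (negative distance from the target) are assumptions chosen for illustration, not part of Modcon-AI.

```python
import random

# All values below are illustrative assumptions, not Modcon-AI parameters.
TARGET = 3              # hypothetical index of the desired operating point
N_STATES = 5            # discretized process states 0..4
ACTIONS = (-1, 0, +1)   # decrease, hold, or increase the set point


def step(state, action_idx):
    """Toy environment: apply the action, then penalize distance from TARGET."""
    next_state = min(max(state + ACTIONS[action_idx], 0), N_STATES - 1)
    reward = -abs(next_state - TARGET)
    return next_state, reward


def train(episodes=2000, steps=20, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state = rng.randrange(N_STATES)  # start each episode in a random state
        for _ in range(steps):
            if rng.random() < epsilon:
                # Explore: try a random action.
                a = rng.randrange(len(ACTIONS))
            else:
                # Exploit: take the action with the highest estimated value.
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            next_state, reward = step(state, a)
            # Move the estimate toward reward + discounted best future value.
            td_target = reward + gamma * max(q[next_state])
            q[state][a] += alpha * (td_target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best known set-point move for each state."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])] for row in q]


q_table = train()
policy = greedy_policy(q_table)
print(policy)
```

The agent starts with an all-zero value table and no knowledge of the environment. After training, the greedy policy is expected to move the state toward the target from either side and to hold once it is there.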

DRNN agents can start by knowing nothing about their environment and selecting random actions. Because reinforcement learning systems figure things out through trial and error, they work best in situations where an action or sequence of events is rapidly established and feedback is obtained quickly to determine the next course of action; there is no need for reams of historical data to crunch through. That makes DRNN well suited to hydrocarbon processing optimization tasks that use established metrics in the form of inputs, actions, and rewards.

Although it is a powerful tool that requires no historical data, DRNN has one significant weakness, which makes it more difficult to implement for hydrocarbon processing with wide operating ranges. DRNN is likely to improve performance only where the pre-trained parameters are already close to yielding the correct process stream quality. The observed gains may result not from the training signal but from changes in the shape of the distribution curve. Therefore, process yields need to be monitored in real time rather than only predicted by the pre-trained models. This can be achieved with on-line analyzers installed in the process to determine the chemical composition or physical properties of the substances involved in hydrocarbon processing.

The Beacon 3000 is an inline, multi-channel process NIR analyzer. It enables non-contact, real-time monitoring and closed-loop control of physical properties and chemical composition in industrial process applications. Based on novel algorithms, the Beacon 3000 measures the absorption spectrum in the near infrared (NIR) quickly and accurately, without labor or material waste. Up to 8 field units, which use no electricity and contain no moving parts, can be connected to one Main Analyzer. When integrated into the DRNN dynamic model, the Beacon 3000 enables tighter process optimization and identifies process excursions before they affect yield. The Freetune™ software incorporated in the analyzer continuously compares its measurements with laboratory test results. In case of a persistent deviation, Freetune™ automatically corrects the analyzer's predicted analytical results so that they continue to comply with the laboratory test results.
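
The idea of correcting analyzer results against laboratory tests only when a deviation persists can be sketched as follows. This is not the Freetune™ algorithm, whose internals are not described here. It is a hypothetical additive bias correction, and the class name `BiasCorrector`, the `window` size, and the `tolerance` threshold are all assumptions made for illustration.

```python
from collections import deque
from statistics import mean


class BiasCorrector:
    """Hypothetical additive bias correction; NOT the Freetune algorithm."""

    def __init__(self, window=5, tolerance=0.2):
        # Recent differences between lab results and corrected analyzer values.
        self.residuals = deque(maxlen=window)
        self.tolerance = tolerance  # assumed acceptable deviation, in property units
        self.offset = 0.0           # correction currently applied to analyzer values

    def correct(self, analyzer_value):
        """Return the analyzer value with the current offset applied."""
        return analyzer_value + self.offset

    def add_lab_result(self, analyzer_value, lab_value):
        """Record one lab comparison and update the offset if the bias persists."""
        self.residuals.append(lab_value - self.correct(analyzer_value))
        window_full = len(self.residuals) == self.residuals.maxlen
        # A deviation counts as persistent only if every residual in the window
        # exceeds the tolerance in the same direction.
        persistent = window_full and (
            all(r > self.tolerance for r in self.residuals)
            or all(r < -self.tolerance for r in self.residuals)
        )
        if persistent:
            self.offset += mean(self.residuals)
            self.residuals.clear()
        return self.offset


corrector = BiasCorrector(window=3, tolerance=0.2)
for _ in range(3):
    # Analyzer reads 90.0 while the lab consistently reports 90.5.
    corrector.add_lab_result(analyzer_value=90.0, lab_value=90.5)
print(corrector.correct(90.0))
```

Requiring the whole window to deviate in the same direction keeps a single noisy laboratory result from shifting the analyzer output. A real system would also need to handle sampling delay between the analyzer reading and the laboratory test.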

The DRNN dynamic model receives real-time data from the process analyzers, which is verified and validated against the laboratory results and the predicted product quality. The simulated “digital twin” of the process is continuously updated by the process analyzers to achieve the highest possible process efficiency at the lowest cost. This method enables global process optimization by integrating the network inputs and target KPIs, using DRNN dynamic modelling to maximize overall profit and reduce environmental impact.